Source: https://github.com/tolson-vkn/pifrost

Intro #

This project starts with the external-dns project. I assume most Kubernetes practitioners deploy or have deployed external-dns.

If you haven’t, the aim of the project is:

ExternalDNS allows you to control DNS records dynamically via Kubernetes resources in a DNS provider-agnostic way.

ExternalDNS does this by providing interfaces to many DNS providers: AWS Route53, Google CloudDNS, and also other “providers” like CoreDNS.

Why not CoreDNS? #

CoreDNS is the default DNS provider for Kubernetes. It provides all the internal mechanisms for service discovery, and it can also serve a standard zone file. Plus, external-dns supports CoreDNS, so why not just use that?

With this approach I have two options:

- Set up external-dns to use the cluster-deployed CoreDNS

I don’t like this; it feels like it goes beyond the scope of the cluster DNS.

- Deploy a CoreDNS elsewhere and have pi-hole forward local DNS zones to CoreDNS

Because providers like CoreDNS exist, one solution to my problem would have been to deploy a CoreDNS server next to my pi-hole and forward to it.

In both of these options there is some level of forwarding involved. I don’t want to mess with that. pi-hole is my main provider, so let’s just use that.

And most importantly; why not write your own controller?

Enablement #

For me to be able to do this, some stars had to align. PR 2091 was merged into pi-hole and released only a month ago. Without it, there was potentially a hacky solution using the /etc/hosts/ of pi-hole and restarting DNS. Of course, having an API makes this much better. My thanks to @goopilot.

pifrost, what is it and how does it work? #

I tend to follow a Norse naming scheme for my homelab infra. My main hypervisor is yggdrasil. My phones are vidar and dagur.

I decided I wanted this controller to fit into my naming scheme, and devised the following prompt, referring to pi-hole and this controller:

If you were a pie, and you possessed otherworldly or external or godlike powers - you/the pie, is a sort of wayfinder beyond to the ethereal plane - what would you call yourself, or itself, the pie? What’s the pie called?

From that I came up with pifrost (a play on bifrost). Heimdall would have been nice, but my DNS network appliance already has that name. This tool’s transitory nature also fits a bifrost better.

Usage #

Reference: https://github.com/tolson-vkn/pifrost#usage

If anything is unclear here, look at the link above. The repo also has an example in deployment/.

For me, and my config:

    args:
      - server
      - --pihole-host=heimdall.tolson.io
      - --pihole-token=$(PIHOLE_TOKEN)

I have a Let’s Encrypt cert, so I can use https://, and I do not want the automatic ingress feature. I prefer to put annotations on anything that gets a DNS record. It’s not hard to add the annotation, and some of my homelab ingress is actually accessible publicly. While I could host those records locally, I’d prefer to use the same cloud provider DNS at home and away.

The flags missing from my config that others may want to consider are --insecure, which uses http:// if you do not have a cert, and --ingress-auto, which adds every ingress, with or without the annotation.
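The $(PIHOLE_TOKEN) reference in the args is expanded by Kubernetes from an environment variable defined on the same container. A minimal sketch of how that could be wired up follows. The Secret name, its key, and the image reference are placeholders I made up for illustration; the real manifests are in the repo's deployment/ directory.

```yaml
# Sketch only: Secret name, key, and image are placeholders,
# not taken from the pifrost repo.
containers:
  - name: pifrost
    image: example.invalid/pifrost:latest   # placeholder image reference
    args:
      - server
      - --pihole-host=heimdall.tolson.io
      - --pihole-token=$(PIHOLE_TOKEN)      # expanded from the env var below
      # - --insecure                        # use http:// when there is no cert
      # - --ingress-auto                    # add every ingress, annotated or not
    env:
      - name: PIHOLE_TOKEN
        valueFrom:
          secretKeyRef:
            name: pifrost                   # hypothetical Secret name
            key: token                      # hypothetical key in that Secret
```

Keeping the token in a Secret rather than inline in the args keeps it out of the manifest itself, which matters if the manifest lives in a git repo.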

Annotations #

Service Object #

pifrost.tolson.io/domain: foo.tolson.io

This annotation is applied to a Service object. The load balancer IP and the annotation’s domain are sent to pi-hole.
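As an illustration, a Service carrying the annotation might look like the sketch below. Only the annotation key and value come from this post; the Service name, selector, and ports are hypothetical.

```yaml
# Sketch only: name, selector, and ports are made up for illustration.
apiVersion: v1
kind: Service
metadata:
  name: foo
  annotations:
    pifrost.tolson.io/domain: foo.tolson.io   # domain to register in pi-hole
spec:
  type: LoadBalancer        # the load balancer IP is what gets paired with the domain
  selector:
    app: foo
  ports:
    - port: 80
      targetPort: 8080
```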

Ingress Object #

pifrost.tolson.io/ingress: "true"

This is what is needed to act on an ingress when --ingress-auto is not set.
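A sketch of an annotated Ingress, for reference. Only the annotation comes from this post; the Ingress name, host, and backend Service are hypothetical. Exactly which host and address pifrost records for an ingress is not covered here, so check the usage reference for that.

```yaml
# Sketch only: name, host, and backend are made up for illustration.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: foo
  annotations:
    pifrost.tolson.io/ingress: "true"   # opt this ingress in when --ingress-auto is off
spec:
  rules:
    - host: foo.tolson.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: foo
                port:
                  number: 80
```

The value is quoted because Kubernetes annotation values must be strings; an unquoted true would be parsed as a boolean and rejected.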

Demo #

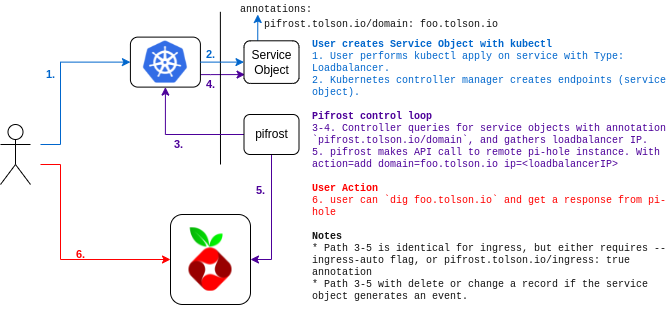

If you want to see pifrost’s visual flow, refer to the image at the top of this post.

Alternative hosting (e.g. On mobile): https://asciinema.org/a/471236

Considerations… #

Don’t run pi-hole inside kubernetes #

I am a big supporter of hosting your entire lab in Kubernetes. Whether it’s nextcloud, jellyfin, or homeassistant, run it in Kubernetes. But I do not say this for your network DNS.

The joke “It’s always DNS”, is… annoying. In modern software engineering we ask A LOT of DNS, and when it fails we like to complain, but DNS doesn’t, it just keeps on keeping on.

You can certainly run pifrost and pi-hole in your Kubernetes cluster. BUT are you prepared to lose DNS network-wide? If you lose your pi-hole pods and they get scheduled to another node that does not have the image, how will Kubernetes fetch the image? If pi-hole is the network DNS and it’s down, it won’t work. While you can set pod-specific DNS and hardcode quad 8 (8.8.8.8), this is a mess.
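For reference, pod-specific DNS is set with the dnsPolicy and dnsConfig fields of the pod spec. A minimal sketch follows; the pod name and image tag are only illustrative.

```yaml
# Sketch only: pod name and image tag are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: pihole
spec:
  dnsPolicy: "None"         # ignore the cluster DNS settings for this pod
  dnsConfig:
    nameservers:
      - 8.8.8.8             # hardcoded quad 8
  containers:
    - name: pihole
      image: pihole/pihole:latest
```

Note that this only changes name resolution inside the pod. Image pulls are done by the container runtime on the node, using the node's own resolver, so each node would also need a resolver that does not depend on pi-hole. That is part of why this gets messy.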

For me and my lab, I choose to run a Pi 4 8GB for the important bits: services like DNS, dnsmasq, and (occasionally) WireGuard. These all run as containers, and in the event of an outage can be moved. But for the most part, it’s a core infra appliance.